Differentiators

True AI performance is governed by physics

Edge AI & Computer Vision: Intelligence Without Latency

Deploy optimized inference models directly on your devices. We leverage TinyML, NPUs, and quantization to deliver privacy-first, real-time AI solutions that don’t depend on the cloud.

- Hardware-Aware Model Optimization

- Synthetic Data Generation

- From TinyML to GenAI

- Embedded Platforms Delivered

Overview

Optimizing performance, cost, and scalability to deliver long-term value.

Hardware-Aware Model Optimization:

We understand the silicon. We profile models layer by layer to ensure they map efficiently to the target NPU architecture (e.g., the integrated NPU on an NXP i.MX8M Plus, or the Ethos-U microNPU paired with an Arm Cortex-M55).
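On-target profiling relies on the target's own toolchain, but a useful first pass is to estimate each layer's compute and weight footprint analytically and see which layers dominate. The sketch below assumes plain 2D convolutions with int8 weights; the `Conv2D` description class and `profile` helper are hypothetical illustrations (not a framework API), and the example layers are loosely modeled on the first three layers of MobileNetV1.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Conv2D:
    """Hypothetical description of one convolution layer (not a framework API)."""
    name: str
    in_ch: int
    out_ch: int
    kernel: int              # square kernel: kernel x kernel
    out_h: int
    out_w: int
    depthwise: bool = False  # depthwise: one filter per channel (in_ch == out_ch)

    def _taps_per_output(self) -> int:
        # Multiply-accumulates needed to produce a single output value.
        return self.kernel * self.kernel * (1 if self.depthwise else self.in_ch)

    def macs(self) -> int:
        return self._taps_per_output() * self.out_ch * self.out_h * self.out_w

    def weight_bytes(self, bytes_per_weight: int = 1) -> int:
        # int8 weights by default; biases and quantization parameters ignored.
        return self._taps_per_output() * self.out_ch * bytes_per_weight


def profile(layers: List[Conv2D]) -> List[Tuple[str, int, int, float]]:
    """Return (name, MACs, weight bytes, share of total MACs), most expensive first."""
    total = sum(layer.macs() for layer in layers)
    rows = [(layer.name, layer.macs(), layer.weight_bytes(), layer.macs() / total)
            for layer in layers]
    return sorted(rows, key=lambda row: row[1], reverse=True)


if __name__ == "__main__":
    example = [
        Conv2D("conv1", 3, 32, 3, 112, 112),
        Conv2D("dw1", 32, 32, 3, 112, 112, depthwise=True),
        Conv2D("pw1", 32, 64, 1, 112, 112),
    ]
    for name, macs, wbytes, share in profile(example):
        print(f"{name:6s} {macs:>12,d} MACs {wbytes:>6,d} B weights {share:6.1%}")
```

For these three layers the 1x1 pointwise convolution accounts for 64% of the MACs (25,690,112 of 40,140,800), so that is the layer where operator support and memory layout on the target accelerator matter most.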

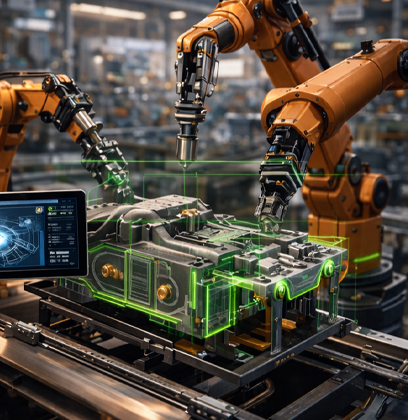

Synthetic Data Generation:

Real-world data is often scarce, especially for “rare events” such as manufacturing defects. We use generative techniques and 3D rendering to create synthetic training datasets, bootstrapping model performance before physical data collection is complete.
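The approach described above relies on generative models and 3D rendering. As a much simpler stand-in, the sketch below shows the core idea with procedural generation instead: render a clean surface, draw a "scratch" along a random line, and get an exact bounding-box label for free. The image size, intensity values, and the `make_sample`/`make_dataset` helpers are illustrative assumptions; images are plain nested lists so only the standard library is needed.

```python
import random
from typing import List, Optional, Tuple

Image = List[List[int]]
BBox = Tuple[int, int, int, int]  # (x_min, y_min, x_max, y_max)


def bresenham(x0: int, y0: int, x1: int, y1: int) -> List[Tuple[int, int]]:
    """Integer pixels on the segment (x0, y0)-(x1, y1), valid for all octants."""
    points = []
    dx, dy = abs(x1 - x0), -abs(y1 - y0)
    sx = 1 if x0 < x1 else -1
    sy = 1 if y0 < y1 else -1
    err = dx + dy
    while True:
        points.append((x0, y0))
        if x0 == x1 and y0 == y1:
            return points
        e2 = 2 * err
        if e2 >= dy:
            err += dy
            x0 += sx
        if e2 <= dx:
            err += dx
            y0 += sy


def make_sample(width: int = 64, height: int = 64, defect: bool = True,
                seed: Optional[int] = None) -> Tuple[Image, Optional[BBox]]:
    rng = random.Random(seed)
    # Clean surface: mid-grey (128) with small uniform noise.
    img = [[128 + rng.randint(-8, 8) for _ in range(width)] for _ in range(height)]
    if not defect:
        return img, None
    # Scratch: a dark 1-pixel line between two random points.
    pts = bresenham(rng.randrange(width), rng.randrange(height),
                    rng.randrange(width), rng.randrange(height))
    for x, y in pts:
        img[y][x] = 30
    xs = [x for x, _ in pts]
    ys = [y for _, y in pts]
    return img, (min(xs), min(ys), max(xs), max(ys))


def make_dataset(n: int, defect_ratio: float = 0.5, seed: int = 0):
    """Generate n labeled samples; defect_ratio lets rare defects be oversampled."""
    rng = random.Random(seed)
    return [make_sample(defect=rng.random() < defect_ratio, seed=rng.randrange(2**32))
            for _ in range(n)]
```

Because the generator decides where the scratch goes, every label is exact, and `defect_ratio` can be set far above the real-world defect rate so the model sees enough positive examples.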

From TinyML to GenAI:

We are pushing the boundaries of what’s possible on the edge, experimenting with Small Language Models (SLMs) and quantized generative AI models that run locally, enabling natural voice interfaces without cloud latency.
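Whether a generative model can run locally is largely a memory question. As back-of-the-envelope arithmetic, weight storage is parameters × bits per weight ÷ 8; the sketch below applies that to a hypothetical 1-billion-parameter SLM and a hypothetical 1 GB memory budget. It counts weights only (no activations, KV cache, or per-block quantization scales), and the 25% headroom reserved for everything else is an arbitrary assumption.

```python
def weight_bytes(num_params: int, bits_per_weight: int) -> int:
    """Storage for the weights alone; ignores activations, KV cache and quantization scales."""
    return num_params * bits_per_weight // 8


def fits_in_memory(num_params: int, bits_per_weight: int, budget_bytes: int,
                   headroom: float = 0.25) -> bool:
    """True if the weights fit while reserving `headroom` of the budget (assumed) for the rest."""
    return weight_bytes(num_params, bits_per_weight) <= budget_bytes * (1.0 - headroom)


if __name__ == "__main__":
    params = 1_000_000_000  # hypothetical 1B-parameter small language model
    budget = 1_000_000_000  # hypothetical 1 GB of RAM available to the model
    for bits in (16, 8, 4):
        size_gb = weight_bytes(params, bits) / 1e9
        print(f"{bits:2d}-bit: {size_gb:.1f} GB, fits: {fits_in_memory(params, bits, budget)}")
```

Under these assumptions only the 4-bit variant fits: 0.5 GB of weights against a 0.75 GB allowance, versus 1.0 GB at 8 bits and 2.0 GB at 16 bits.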


Technical Capabilities Deep Dive

- Defect Detection: Automated optical inspection (AOI) systems for manufacturing lines that detect scratches, dents, or misalignments at high speed. 21

- Object Tracking & Counting: People counting for retail analytics, vehicle tracking for traffic management, and PPE detection for construction safety.

- Facial Recognition & Biometrics: Secure on-device authentication systems that respect user privacy by storing only non-reconstructible embeddings. 20

- Predictive Maintenance: Analyzing the acoustic signatures of motors and bearings to detect anomalies (grinding, imbalance) weeks before failure. 15

- Voice Interfaces: Keyword spotting (wake-word detection) and local command recognition using highly optimized models that run on DSPs, ensuring functionality even offline.
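For the people- and vehicle-counting case, a common approach once a tracker has assigned stable IDs is to count each time an object's centroid crosses a virtual line. The sketch assumes an upstream tracker (not shown) that supplies `(track_id, centroid)` pairs per frame in image coordinates with y increasing downward; the `LineCrossingCounter` class and the horizontal counting line are illustrative choices.

```python
from typing import Dict, Tuple

Point = Tuple[float, float]  # (x, y) in pixels, y grows downward


class LineCrossingCounter:
    """Counts tracked centroids crossing the horizontal line y = line_y."""

    def __init__(self, line_y: float):
        self.line_y = line_y
        self.last_y: Dict[int, float] = {}
        self.count_in = 0   # crossed from above the line to below it
        self.count_out = 0  # crossed from below the line to above it

    def update(self, track_id: int, centroid: Point) -> None:
        y = centroid[1]
        prev = self.last_y.get(track_id)
        if prev is not None:
            # Half-open test: a point exactly on the line counts as "below",
            # so a single crossing is never counted twice.
            if prev < self.line_y <= y:
                self.count_in += 1
            elif prev >= self.line_y > y:
                self.count_out += 1
        self.last_y[track_id] = y
```

A production counter would typically add hysteresis (a small band around the line) so someone lingering on the line is not counted repeatedly, and would discard IDs the tracker has dropped.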

Bringing AI to microcontrollers (MCUs)

- TensorFlow Lite for Microcontrollers (TFLite Micro): Porting and optimizing models to run on Cortex-M devices with <256 KB RAM.

- Sensor Fusion: Combining data from accelerometers, gyroscopes, and magnetometers using Kalman filters and neural networks for precise motion tracking in wearables.
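As a minimal illustration of the Kalman-filter side of sensor fusion, the single-state filter below estimates one tilt angle: the gyroscope rate drives the prediction and the accelerometer's gravity-derived angle corrects it. This is deliberately simplified: the noise values `q` and `r` are placeholder tuning assumptions, gyro bias is not modeled (a fuller design would add it as a second state), and magnetometer fusion is omitted.

```python
import math


def accel_tilt(ay: float, az: float) -> float:
    """Tilt about the x-axis (radians) from the gravity vector; valid only when not accelerating."""
    return math.atan2(ay, az)


class TiltKalman1D:
    """Scalar Kalman filter whose state is a tilt angle in radians."""

    def __init__(self, q: float = 0.001, r: float = 0.03,
                 angle0: float = 0.0, p0: float = 1.0):
        self.q = q          # process noise added per step (assumed tuning value)
        self.r = r          # accelerometer-angle measurement variance (assumed)
        self.angle = angle0
        self.p = p0         # variance of the current estimate

    def step(self, gyro_rate: float, accel_angle: float, dt: float) -> float:
        # Predict: integrate the gyro rate (rad/s); uncertainty grows by q.
        self.angle += gyro_rate * dt
        self.p += self.q
        # Update: move toward the accelerometer angle by the Kalman gain k.
        k = self.p / (self.p + self.r)
        self.angle += k * (accel_angle - self.angle)
        self.p *= 1.0 - k
        return self.angle
```

With these example values the gain settles at 1/6, so each step moves the estimate about a sixth of the way toward the accelerometer reading while the gyro carries short-term motion.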

Scalable, Enterprise-Grade Tech Stack

Frameworks

TensorFlow Lite, PyTorch Mobile, Edge Impulse, ONNX Runtime, OpenVINO, TensorRT

Algorithms

CNNs (MobileNet, EfficientNet, YOLOv8), RNNs/LSTMs, Transformers (ViT), GANs, Autoencoders

Hardware Targets

NVIDIA Jetson (Nano/Orin), NXP i.MX8M Plus (NPU), STMicroelectronics (STM32Cube.AI), Raspberry Pi, Hailo AI, Google Coral Edge TPU

Tools

Kubeflow (MLOps), CVAT (annotation), AkhilaFlex AI Module, Docker

Techniques

Quantization (Int8/Int4), Pruning, Knowledge Distillation, Federated Learning

Languages

C (C99/C11), C++ (C++14/17/20), Rust (Embedded HAL), Assembly (ARM/RISC-V)
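Of the techniques listed, quantization is the easiest to show in a few lines. The sketch implements standard asymmetric (affine) int8 quantization, where a real value x maps to q = round(x / scale) + zero_point, clamped to [-128, 127]. The range is widened to include 0.0 so that zero is exactly representable, a common convention. Per-channel scales, int4 packing, and calibration over real data are out of scope, and the function names are illustrative.

```python
from typing import List, Tuple

QMIN, QMAX = -128, 127  # int8 range


def quant_params(x_min: float, x_max: float) -> Tuple[float, int]:
    """Scale and zero point for asymmetric int8 quantization of [x_min, x_max]."""
    # Widen the range to include 0.0 so zero maps exactly to an integer.
    x_min, x_max = min(x_min, 0.0), max(x_max, 0.0)
    scale = (x_max - x_min) / (QMAX - QMIN)
    if scale == 0.0:  # all-zero tensor: any positive scale works
        scale = 1.0
    zero_point = round(QMIN - x_min / scale)
    return scale, max(QMIN, min(QMAX, zero_point))


def quantize(xs: List[float], scale: float, zero_point: int) -> List[int]:
    # Python's round() rounds half to even; some kernels round half away from zero.
    return [max(QMIN, min(QMAX, round(x / scale) + zero_point)) for x in xs]


def dequantize(qs: List[int], scale: float, zero_point: int) -> List[float]:
    return [(q - zero_point) * scale for q in qs]
```

Dequantizing recovers each in-range value to within scale / 2, which is the precision traded for weights 4× smaller than float32.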

Industries Served

Manufacturing

Zero-defect manufacturing through real-time visual inspection and robotic guidance. 22

Healthcare

On-device arrhythmia detection in Holter monitors; fall detection systems for elderly care. 1
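Commercial fall detectors typically rely on trained models, but a classic accelerometer heuristic shows the signal they look for: a brief near-free-fall (acceleration magnitude well below 1 g) followed shortly by an impact spike. The thresholds (0.4 g, 2.5 g), the 0.5 s window, and the `detect_fall` helper below are illustrative assumptions, not clinically validated values.

```python
import math
from typing import Optional, Sequence, Tuple

Sample = Tuple[float, float, float]  # (ax, ay, az) in units of g


def detect_fall(samples: Sequence[Sample], rate_hz: float,
                free_fall_g: float = 0.4, impact_g: float = 2.5,
                window_s: float = 0.5) -> Optional[int]:
    """Index of the impact sample if free fall is followed by impact within window_s, else None."""
    window = int(window_s * rate_hz)
    last_free_fall = None
    for i, (ax, ay, az) in enumerate(samples):
        # Orientation-independent: at rest the magnitude is about 1 g.
        magnitude = math.sqrt(ax * ax + ay * ay + az * az)
        if magnitude < free_fall_g:
            last_free_fall = i
        elif (magnitude > impact_g and last_free_fall is not None
              and i - last_free_fall <= window):
            return i
    return None
```

Using the magnitude rather than a single axis means the check works regardless of how the device is worn; requiring the free-fall phase first keeps ordinary bumps from triggering it.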

Security

Intelligent perimeter monitoring systems that filter out false alarms (animals, wind) and alert only on human or vehicle intrusion.
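One simple way to implement that false-alarm filtering, assuming an upstream object detector that emits class labels with confidences, is to alert only when a person or vehicle is detected above a confidence threshold for several consecutive frames. The label names, the 0.6 threshold, the 5-frame persistence requirement, and the `IntrusionAlertFilter` class are hypothetical choices for illustration.

```python
from dataclasses import dataclass
from typing import Iterable

ALERT_LABELS = {"person", "vehicle"}  # hypothetical detector class names


@dataclass
class Detection:
    label: str
    confidence: float


class IntrusionAlertFilter:
    """Raises one alert per continuous intrusion; ignores other classes and brief blips."""

    def __init__(self, min_confidence: float = 0.6, min_frames: int = 5):
        self.min_confidence = min_confidence
        self.min_frames = min_frames
        self.streak = 0  # consecutive frames containing a qualifying detection

    def update(self, detections: Iterable[Detection]) -> bool:
        qualifying = any(d.label in ALERT_LABELS and d.confidence >= self.min_confidence
                         for d in detections)
        self.streak = self.streak + 1 if qualifying else 0
        # Fire only when the streak first reaches min_frames, not on every later frame.
        return self.streak == self.min_frames
```

Animals are dropped by the class check, and wind-induced flicker that produces only isolated detections never builds a long enough streak to alert.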

Frequently Asked Questions

At Akhila Labs, embedded engineering is the foundation of everything we build. We go beyond writing firmware that runs on hardware: we engineer systems that extract maximum performance, reliability, and efficiency from the silicon itself.

Can you integrate LiDAR, cameras, and other sensors for autonomous navigation?

Yes. We design sensor fusion pipelines using EKF/UKF, implement SLAM for GPS-denied environments, and integrate multiple sensor modalities (LiDAR, RGB, depth, radar) for robust autonomous navigation.

How do you test autonomous drone behavior before real-world deployment?

We use simulation-first development with PX4 SITL/HITL in Gazebo/Ignition for virtual environments, hardware-in-the-loop rigs, and staged field trials with progressive autonomy levels.
