ROBOTICS & AUTONOMOUS DRONES SOLUTION

We architect flight stacks, perception, and control for autonomous robots and UAVs using PX4, ROS/ROS 2, advanced sensing, and edge AI for GPS-denied and industrial environments.

Key Value Propositions

Akhila Labs engineers autonomous robots and UAVs end to end, from flight stacks and perception to control and edge AI, enabling fast, scalable, and deployment-ready robotics solutions.

Industry

Problem Statement

Autonomous drones and robots must operate safely and reliably in complex, dynamic environments. Your team is likely facing:

Akhila Labs enables the transition from prototype development to scalable deployments aligned with regulatory standards.


Selecting the Right Stack

PX4 vs. ArduPilot, ROS 1 vs. ROS 2, custom vs. off-the-shelf—each choice has trade-offs in real-time performance, community support, and long-term maintainability.


Sensor Fusion Challenges

Integrating heterogeneous sensors (LiDAR, stereo/RGB cameras, IMUs, GNSS, UWB, radar) with tight timing and bandwidth constraints while maintaining real-time control loops.
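Tight timing usually starts with putting every sample on a common clock and pairing measurements by timestamp. The sketch below, an illustrative assumption rather than any particular driver's API, pairs each camera frame with the nearest IMU sample and rejects pairs farther apart than a tolerance; the function name `match_nearest` and the 5 ms tolerance are hypothetical.

```python
from bisect import bisect_left
from typing import List, Tuple

def match_nearest(frame_ts: List[float], imu_ts: List[float],
                  tol_s: float = 0.005) -> List[Tuple[float, float]]:
    """Pair each camera timestamp with the closest IMU timestamp.

    Both lists must be sorted and on a common clock (seconds). Frames with no
    IMU sample within tol_s are dropped rather than paired with stale data.
    """
    pairs = []
    for t in frame_ts:
        i = bisect_left(imu_ts, t)
        # The closest sample is either just before or just after t.
        candidates = [imu_ts[j] for j in (i - 1, i) if 0 <= j < len(imu_ts)]
        if not candidates:
            continue
        best = min(candidates, key=lambda s: abs(s - t))
        if abs(best - t) <= tol_s:
            pairs.append((t, best))
    return pairs

imu = [k * 0.005 for k in range(40)]   # 200 Hz IMU, 0.0 s to 0.195 s
frames = [0.0331, 0.0664, 0.5]         # ~30 Hz camera; last frame has no IMU nearby
print(match_nearest(frames, imu))
```

Dropping unmatched frames, instead of pairing them with an old IMU sample, is a deliberate choice: a stale pairing silently corrupts the fusion, while a dropped frame only lowers the update rate.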

GPS-Denied Navigation

Standard commercial autopilots rely heavily on GPS. In underground mines, inside pipes, warehouses, and dense urban areas, GPS is unavailable or multipath-degraded, causing rapid drift and crashes within seconds.
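To see why drift sets in so fast, consider dead reckoning on an IMU alone: a constant, uncorrected accelerometer bias b integrates twice into a position error of 0.5 · b · t². The sketch below evaluates that textbook relation; the 0.05 m/s² bias is an assumed, illustrative value, not the specification of any particular IMU.

```python
def position_drift_m(bias_mps2: float, t_s: float) -> float:
    """Position error from double-integrating a constant accelerometer bias.

    Assumes zero initial velocity error and no other error sources, a
    simplification: gyro bias and sensor noise make real drift worse.
    """
    return 0.5 * bias_mps2 * t_s ** 2

for t in (1, 5, 10, 30):
    print(f"{t:>3} s -> {position_drift_m(0.05, t):7.2f} m")
```

With that assumed bias the error is already 2.5 m after 10 s and 22.5 m after 30 s, which is why GPS-denied flight needs continuous external aiding such as vision, LiDAR, or UWB.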

Latency & Compute Trade-Off

Running heavy SLAM and AI perception models on battery-powered platforms drains power quickly. Balancing real-time flight control (MCU) with high-level mission compute (GPU/FPGA) is a complex systems engineering challenge.
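A back-of-the-envelope energy budget makes the trade-off concrete: every watt drawn by the companion computer comes out of the same battery that keeps the vehicle in the air. All numbers below (a 100 Wh pack, 80% usable, 250 W hover power, 15 W versus 40 W of compute) are hypothetical placeholders.

```python
def endurance_min(pack_wh: float, usable_frac: float,
                  hover_w: float, compute_w: float) -> float:
    """Hover endurance in minutes for a constant total power draw."""
    return pack_wh * usable_frac / (hover_w + compute_w) * 60.0

light = endurance_min(100, 0.8, 250, 15)   # lightweight perception stack
heavy = endurance_min(100, 0.8, 250, 40)   # heavier SLAM + AI workload
print(f"{light:.1f} min vs {heavy:.1f} min")
```

Under these assumptions the heavier workload costs about 1.6 minutes of hover time (roughly 9%), before counting the extra mass of a larger computer and its cooling, which raises hover power further.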

Middleware Bottlenecks

Legacy ROS 1 architectures suffer from a single point of failure (roscore) and lack real-time determinism, causing unpredictable latency and system fragility.

Safety, redundancy, and failsafes

BVLOS (Beyond Visual Line of Sight) and industrial operations require proven fallback behaviors: loss-of-link RTH, geofencing, and safe-landing logic that work reliably in edge cases.
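A geofence check is one of the simpler failsafe building blocks to illustrate. The sketch below tests whether a position lies inside a polygonal fence using the standard ray-casting (even-odd) rule, then picks a fallback action. The action names and the priority order (fence breach before link loss) are hypothetical, not PX4's or ArduPilot's actual failsafe logic, and positions are assumed to be in a local metric frame.

```python
from typing import List, Tuple

def inside_fence(x: float, y: float, polygon: List[Tuple[float, float]]) -> bool:
    """Even-odd ray casting: True if (x, y) lies inside the polygon.

    polygon: ordered (x, y) vertices in a local metric frame.
    Points exactly on an edge may be classified either way.
    """
    inside = False
    n = len(polygon)
    for i in range(n):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % n]
        # Does a horizontal ray from (x, y) towards +x cross this edge?
        if (y1 > y) != (y2 > y):
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside

def failsafe_action(x: float, y: float, fence, link_ok: bool) -> str:
    """Hypothetical priority: fence breach -> LAND, link loss -> RTH."""
    if not inside_fence(x, y, fence):
        return "LAND"
    if not link_ok:
        return "RTH"
    return "CONTINUE"

fence = [(0, 0), (100, 0), (100, 50), (0, 50)]
print(failsafe_action(20, 10, fence, link_ok=True))
print(failsafe_action(20, 10, fence, link_ok=False))
print(failsafe_action(150, 10, fence, link_ok=True))
```

Keeping the decision a pure function of the current state makes it easy to exhaustively test edge cases in simulation before flight.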

Our Solution

Approach

ROS 2 & PX4 Integration

We leverage ROS 2 (Humble/Iron) for its distributed architecture, real-time capabilities, and security.


Middleware Bridge

We utilize Micro-XRCE-DDS to bridge PX4 running on real-time hardware (STM32H7, Pixhawk FMUv5/v6) with companion computers (Jetson, Raspberry Pi, NXP i.MX). This enables ROS 2 nodes to publish and subscribe to uORB topics directly, yielding high-bandwidth communication between the flight controller and the companion computer.
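One detail any PX4 to ROS 2 bridge has to get right is the frame convention: PX4 reports local positions and velocities in NED (north, east, down), while ROS follows REP 103 and uses ENU (east, north, up). The helpers below are a minimal sketch of the vector conversion; orientation (quaternions, and the FRD versus FLU body frames) needs a separate rotation and is not covered here.

```python
from typing import Tuple

Vec3 = Tuple[float, float, float]

def ned_to_enu(v: Vec3) -> Vec3:
    """Convert a NED vector (north, east, down) to ENU (east, north, up)."""
    n, e, d = v
    return (e, n, -d)

def enu_to_ned(v: Vec3) -> Vec3:
    """Convert an ENU vector back to NED; the mapping is its own inverse."""
    e, n, u = v
    return (n, e, -u)

# 10 m north, 2 m east, 5 m above the origin (down = -5 in NED).
print(ned_to_enu((10.0, 2.0, -5.0)))  # (2.0, 10.0, 5.0)
```

Doing the conversion in exactly one place, at the bridge boundary, avoids the classic bug of a vehicle climbing when it was told to descend.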


Custom Flight Modes

We develop custom PX4 flight modes in C++ for mission-specific behaviors (pipe inspection, wall-following, precision landing on AprilTags, formation flight) not available in standard distributions.
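As an illustration of the kind of logic a precision-landing mode contains, the sketch below turns the horizontal offset of a detected AprilTag into a saturated velocity setpoint and only allows descent once the vehicle is roughly centered. The gain, speed limits, and centering threshold are hypothetical tuning values, and this is plain Python, not PX4's C++ flight-mode API.

```python
import math
from typing import Tuple

def landing_setpoint(dx_m: float, dy_m: float, kp: float = 0.8,
                     v_max: float = 1.0, center_tol_m: float = 0.15,
                     descent_mps: float = 0.5) -> Tuple[float, float, float]:
    """Return (vx, vy, vz) from the tag's horizontal offset (tag minus vehicle).

    Proportional control towards the tag with horizontal speed clamped to
    v_max. vz is positive-down (as in NED) and is non-zero only when the
    vehicle is within center_tol_m of the tag.
    """
    vx, vy = kp * dx_m, kp * dy_m
    speed = math.hypot(vx, vy)
    if speed > v_max:
        scale = v_max / speed
        vx, vy = vx * scale, vy * scale
    aligned = math.hypot(dx_m, dy_m) <= center_tol_m
    vz = descent_mps if aligned else 0.0
    return vx, vy, vz

print(landing_setpoint(3.0, 4.0))   # far off: clamped speed, hold altitude
print(landing_setpoint(0.05, 0.0))  # nearly centered: small correction, descend
```

Clamping the vector's magnitude, rather than each axis separately, preserves the direction towards the tag.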


ROS 2 Native

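Unlike legacy ROS 1, ROS 2 has no single master node, is built on DDS middleware with configurable QoS, supports more deterministic execution through real-time-capable executors, and provides built-in security (SROS2), making it suitable for production and enterprise deployments.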

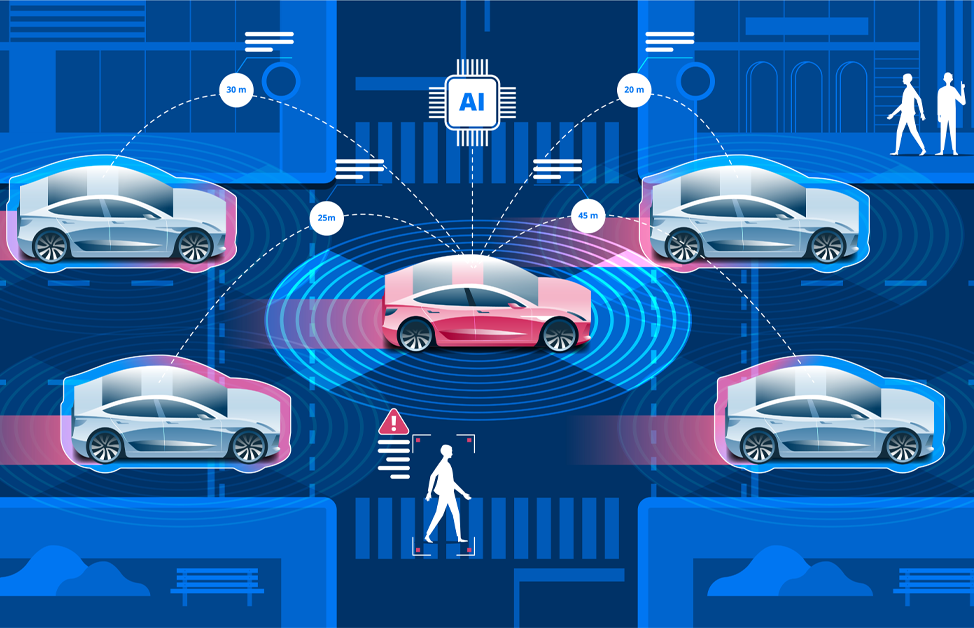

Perception & Localization (SLAM)

Multi-Modal Sensor Fusion for GPS-Denied Environments

Visual-Inertial Odometry (VIO):

VINS-Fusion or OpenVINS tightly couple camera tracking with high-rate IMU data to deliver centimeter-level positioning without GPS.

LiDAR Mapping:

LIO-SAM or Fast-LIO2 with 3D LiDARs (Velodyne, Ouster, Livox) generate real-time 3D occupancy maps and loop closures, even in dark or visually featureless environments.
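Occupancy maps of this kind are commonly stored as per-voxel log-odds, which turns each probabilistic sensor update into an addition. The sketch below shows that update for a dictionary of voxels; the hit/miss probabilities and clamping limits are assumed values, and real mappers (OctoMap-style structures, for example) add ray casting and efficient spatial indexing on top.

```python
import math
from typing import Dict, Tuple

Voxel = Tuple[int, int, int]

def logit(p: float) -> float:
    return math.log(p / (1.0 - p))

L_HIT, L_MISS = logit(0.7), logit(0.4)   # assumed sensor model
L_MIN, L_MAX = logit(0.12), logit(0.97)  # clamping keeps cells able to change

def update(grid: Dict[Voxel, float], voxel: Voxel, hit: bool) -> None:
    """Add the hit/miss log-odds to one voxel, clamped to [L_MIN, L_MAX]."""
    l = grid.get(voxel, 0.0) + (L_HIT if hit else L_MISS)
    grid[voxel] = min(max(l, L_MIN), L_MAX)

def probability(grid: Dict[Voxel, float], voxel: Voxel) -> float:
    """Occupancy probability; never-observed voxels default to 0.5."""
    return 1.0 - 1.0 / (1.0 + math.exp(grid.get(voxel, 0.0)))

grid: Dict[Voxel, float] = {}
for _ in range(3):
    update(grid, (4, 2, 1), hit=True)   # voxel repeatedly observed as occupied
update(grid, (3, 2, 1), hit=False)      # a ray passed through this voxel
print(round(probability(grid, (4, 2, 1)), 3), round(probability(grid, (3, 2, 1)), 3))
```

Clamping matters in dynamic scenes: without it, a cell seen as occupied hundreds of times would take just as many misses to clear after the obstacle moves.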

Loop Closure & Relocalization:

Visual loop detection and global relocalization enable drift recovery and reliable operation in large, complex spaces.

Obstacle Avoidance & Path Planning

Autonomous Obstacle Avoidance and Navigation Planning

Local Planning (ROS 2 Nav2):

PX4-Avoidance and the ROS 2 Navigation Stack use depth cameras (Intel RealSense D435i, Stereolabs ZED) to build a local 3D voxel grid and dynamically avoid obstacles without a pre-mapped environment.
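Building a local voxel grid from depth data largely comes down to quantizing 3D points into integer cell indices. The sketch below does that for points already transformed into the vehicle's local frame; the 0.2 m resolution, the 5 m range limit, and the function name are assumptions.

```python
import math
from typing import Iterable, Set, Tuple

Point = Tuple[float, float, float]
Voxel = Tuple[int, int, int]

def voxelize(points: Iterable[Point], resolution_m: float = 0.2,
             max_range_m: float = 5.0) -> Set[Voxel]:
    """Map points (local frame, metres) to the set of occupied voxel indices.

    Points farther than max_range_m from the origin are ignored, which keeps
    the grid local and bounds memory. math.floor keeps negative coordinates
    in the correct cell (int() would truncate towards zero).
    """
    occupied = set()
    for x, y, z in points:
        if x * x + y * y + z * z > max_range_m ** 2:
            continue
        occupied.add((math.floor(x / resolution_m),
                      math.floor(y / resolution_m),
                      math.floor(z / resolution_m)))
    return occupied

cloud = [(1.05, 0.0, 0.0), (1.15, 0.05, 0.1), (-0.1, 0.3, 0.0), (9.0, 0.0, 0.0)]
print(sorted(voxelize(cloud)))
```

Storing occupied cells in a set also deduplicates the many depth pixels that land in the same voxel, which is what keeps the local map cheap to update at camera frame rate.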

Global & Real-time Planning:

A* and RRT* algorithms generate collision-free paths, while real-time replanning at 10–20 Hz enables rapid adaptation to new obstacles and mission changes.
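For reference, a minimal A* over a 2D occupancy grid looks like the sketch below. It uses 4-connected moves with unit cost and a Manhattan heuristic, which is admissible for that move set, so the returned path is a shortest one. Real planners run on 3D maps, account for vehicle dynamics, and replan continuously; none of that is modeled here.

```python
import heapq
from typing import Dict, List, Optional, Tuple

Cell = Tuple[int, int]

def astar(grid: List[str], start: Cell, goal: Cell) -> Optional[List[Cell]]:
    """Shortest 4-connected path on a grid of '.' (free) and '#' (blocked)."""
    rows, cols = len(grid), len(grid[0])

    def h(c: Cell) -> int:  # Manhattan distance, admissible for unit steps
        return abs(c[0] - goal[0]) + abs(c[1] - goal[1])

    open_heap = [(h(start), 0, start)]  # entries are (f = g + h, g, cell)
    came_from: Dict[Cell, Cell] = {}
    g_cost = {start: 0}
    while open_heap:
        _, g, cell = heapq.heappop(open_heap)
        if cell == goal:
            path = [cell]
            while cell in came_from:
                cell = came_from[cell]
                path.append(cell)
            return path[::-1]
        if g > g_cost[cell]:
            continue  # stale entry: a cheaper route to this cell was found
        r, c = cell
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            nr, nc = nxt
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] != '#':
                ng = g + 1
                if ng < g_cost.get(nxt, float('inf')):
                    g_cost[nxt] = ng
                    came_from[nxt] = cell
                    heapq.heappush(open_heap, (ng + h(nxt), ng, nxt))
    return None  # goal unreachable

grid = ["....",
        ".##.",
        "...."]
path = astar(grid, (0, 0), (2, 3))
print(path)
```

RRT* trades this optimality guarantee on a discretized grid for better scaling in high-dimensional or continuous spaces.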

Hardware Acceleration & Compute

To Maximize Flight Time and Responsiveness

FPGA Integration:

Using Xilinx Kria or Zynq platforms, we implement hardware-accelerated image processing pipelines to offload compute-intensive tasks from the CPU.

NPU Optimization:

Neural networks are optimized with TensorRT on NVIDIA Jetson for high frame-rate inference with low power consumption.

Multi-core Scheduling:

Workloads are distributed across the flight controller, companion computer, and accelerators using ROS 2 composable nodes (components) and custom executors to minimize latency and avoid overload.

Connectivity & Telemetry

Reliable Connectivity & Real-Time Telemetry

Short-range Telemetry:

900 MHz / 2.4 GHz SiK radios provide reliable command, control, and low-latency feedback.
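Loss-of-link detection over such a radio is typically based on a periodic heartbeat. MAVLink systems, for example, broadcast HEARTBEAT messages, commonly at 1 Hz. The class below is a hypothetical sketch of a timeout monitor; the class name and the 3 s timeout are illustrative, and the time source is passed in so the logic can be tested without real delays.

```python
class LinkMonitor:
    """Declares the link lost if no heartbeat arrives within timeout_s.

    Hypothetical helper: feed it heartbeat receive times and query it with
    the current time, both in seconds from the same monotonic clock.
    """

    def __init__(self, timeout_s: float = 3.0):
        self.timeout_s = timeout_s
        self.last_heartbeat = None

    def on_heartbeat(self, now_s: float) -> None:
        self.last_heartbeat = now_s

    def link_ok(self, now_s: float) -> bool:
        if self.last_heartbeat is None:
            return False  # never heard from the other side
        return (now_s - self.last_heartbeat) <= self.timeout_s

mon = LinkMonitor(timeout_s=3.0)
mon.on_heartbeat(10.0)
print(mon.link_ok(12.0), mon.link_ok(13.5))
```

On a real system `now_s` would come from a monotonic clock such as `time.monotonic()`, so wall-clock adjustments cannot trigger or mask a link-loss failsafe.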

BVLOS Backhaul:

LTE/5G with VPN or ZeroTier enables secure long-distance operations.

Edge–Cloud Split:

Local autonomy is combined with cloud-based route planning, geofencing, fleet analytics, and anomaly detection.

Use Cases & Applications

Akhila Labs supports a wide spectrum of robotics and autonomous drone applications:

Pipeline & utility inspection

Autonomous drones navigating underground pipes, tunnels, and conduits using SLAM and onboard LED lighting, detecting corrosion, cracks, and blockages without GPS.

Construction & site monitoring

Daily 3D mapping of construction progress using photogrammetry to track project timelines and anomalies.

Warehouse inventory drones

Autonomous scanning of high shelves with barcode readers and weight sensors, integrated with Warehouse Management Systems (WMS).

Last-mile delivery

Drones equipped with precision landing capabilities using AprilTag or QR code fiducials for secure, autonomous package drop-off.

Precision agriculture

Autonomous scouting and spraying drones with multispectral cameras that compute live vegetation indices to optimize pesticide and herbicide use.

Search and rescue (SAR)

Swarms of drones covering large areas to detect thermal signatures of missing persons in dense forests or disaster zones.

Industrial asset inspection

Inspection of power lines, solar farms, wind turbines, and bridges using onboard LiDAR and thermal cameras, generating 3D maps and defect reports.

Confined space inspection

Collision-tolerant drones designed for boilers, tanks, and silos, reducing the need for human entry into hazardous environments.

Security & perimeter patrol

Autonomous UGVs (ground robots) performing scheduled patrols with thermal imaging for intrusion detection and auto-docking for charging.

Autonomous mining survey

Volumetric measurements of stockpiles and pit geometry without disrupting ongoing operations.
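The live vegetation indices mentioned under precision agriculture are usually simple per-pixel band ratios. The best known is NDVI = (NIR - Red) / (NIR + Red), computed below for reflectance values from a multispectral camera. The sample reflectances are made up for illustration, and a real pipeline would apply radiometric calibration first.

```python
from typing import List

def ndvi(nir: List[float], red: List[float]) -> List[float]:
    """Per-pixel NDVI in [-1, 1]; pixels with zero total reflectance give 0."""
    if len(nir) != len(red):
        raise ValueError("band lengths differ")
    out = []
    for n, r in zip(nir, red):
        total = n + r
        out.append((n - r) / total if total > 0 else 0.0)
    return out

# Illustrative reflectances: dense vegetation, bare soil, water.
vals = ndvi([0.50, 0.30, 0.02], [0.08, 0.25, 0.05])
print([round(v, 3) for v in vals])
```

Healthy vegetation reflects strongly in near-infrared and absorbs red light, so it scores high; bare soil sits near zero and water goes negative, which is what lets a spraying drone target only the zones that need treatment.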

Technologies & Tools

Robotics Middleware

ROS 2 (Humble, Iron), Micro-XRCE-DDS, MAVLink, CycloneDDS, FastDDS

Flight Control

PX4 Autopilot, ArduPilot, Pixhawk-compliant FMUs (STM32H7), NuttX/embedded OS

SLAM & Perception

VINS-Fusion, ORB-SLAM3, Cartographer, LIO-SAM, RTAB-Map, OpenVINS, ROS 2 Navigation Stack (Nav2)

Simulation & Development

Gazebo (Ignition), AirSim, Webots, Unreal Engine, PX4 SITL/HITL

Sensors

Intel RealSense (depth), Ouster/Velodyne (LiDAR), FLIR (thermal), Bosch IMUs, TeraRanger (rangefinder), GNSS/RTK, UWB

Vision & AI

OpenCV, YOLOv8, TensorRT, PyTorch, TensorFlow, mmdetection, tracking libraries

Frequently Asked Questions

At Akhila Labs, embedded engineering is the foundation of everything we build. We go beyond writing firmware that runs on hardware—we engineer systems that extract maximum performance, reliability, and efficiency from the silicon itself.

Can you integrate LiDAR, cameras, and other sensors for autonomous navigation?

Yes. We design sensor fusion pipelines using EKF/UKF, implement SLAM for GPS-denied environments, and integrate multiple sensor modalities (LiDAR, RGB, depth, radar) for robust autonomous navigation.
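As a minimal illustration of the filtering idea behind EKF/UKF fusion, the sketch below is a linear 1D Kalman filter that predicts altitude and vertical speed from accelerometer input and corrects them with a rangefinder reading. It is deliberately the linear special case (an EKF linearizes the same predict and update steps around the current estimate), and the class name and noise values are assumptions.

```python
class AltitudeKF:
    """Linear Kalman filter on the state [altitude h (m), vertical speed v (m/s)].

    predict() integrates vertical acceleration (gravity already removed, up
    positive); update() corrects with a rangefinder altitude. q_acc (accel
    noise std, m/s^2) and r_std (rangefinder noise std, m) are assumed values.
    """

    def __init__(self, q_acc: float = 0.5, r_std: float = 0.05):
        self.h, self.v = 0.0, 0.0
        self.P = [[1.0, 0.0], [0.0, 1.0]]  # initial state covariance
        self.qa = q_acc ** 2
        self.r = r_std ** 2

    def predict(self, acc: float, dt: float) -> None:
        self.h += self.v * dt + 0.5 * acc * dt * dt
        self.v += acc * dt
        (p00, p01), (p10, p11) = self.P
        # P <- F P F^T + Q, with F = [[1, dt], [0, 1]] and
        # Q = qa * G G^T for G = [dt^2 / 2, dt] (white acceleration noise).
        self.P = [
            [p00 + dt * (p01 + p10) + dt * dt * p11 + self.qa * dt ** 4 / 4,
             p01 + dt * p11 + self.qa * dt ** 3 / 2],
            [p10 + dt * p11 + self.qa * dt ** 3 / 2,
             p11 + self.qa * dt ** 2],
        ]

    def update(self, z: float) -> None:
        (p00, p01), (p10, p11) = self.P
        s = p00 + self.r             # innovation variance for H = [1, 0]
        k0, k1 = p00 / s, p10 / s    # Kalman gain
        y = z - self.h               # innovation
        self.h += k0 * y
        self.v += k1 * y
        # P <- (I - K H) P
        self.P = [[(1 - k0) * p00, (1 - k0) * p01],
                  [p10 - k1 * p00, p11 - k1 * p01]]

kf = AltitudeKF()
for _ in range(200):  # 4 s at 50 Hz while the vehicle holds 2.0 m
    kf.predict(acc=0.0, dt=0.02)
    kf.update(z=2.0)
print(round(kf.h, 3), round(kf.v, 3))
```

The accelerometer carries the estimate between rangefinder readings, while the rangefinder bounds the drift quantified earlier. A multi-sensor EKF applies the same pattern with larger state vectors and Jacobians in place of F and H.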

How do you test autonomous drone behavior before real-world deployment?

We use simulation-first development with PX4 SITL/HITL in Gazebo/Ignition for virtual environments, hardware-in-the-loop rigs, and staged field trials with progressive autonomy levels.
